Preface

This manual describes the features and operation of the Hyrax data server, a data server developed by OPeNDAP, Inc. as a reference server for the Data Access Protocol, versions 2 and 4. the Hyrax server is modular software with a number of handlers that are loaded into a core framework based on the contents of configuration files. Each of the server’s modules provides a distinct functional capability, such as reading data from a certain kind of file, encoding data, or processing data in different ways.

The text contained here was built up over several years as modules were added to the system. Originally, the documentation was built using a Wiki (because it was a collaborative writing tool for a distributed group of people), where each component had a separate page. Over time, as information was spread across many web pages and the Wiki, this became unmanageable. We hope this new format reads more like a guide for people who want to install and configure the server and less like a design document.

Development Sponsored By

Acknowledgments

The High Altitude Observatory at NCAR contributed the BES framework that is the basis for the server’s data processing engine and modular extensibility.

Keith Seyffarth extracted the Wiki’s text that forms the basis of this manual, and Alexander Porrello and Leonard Porrello edited the text.

1. Hyrax New Features (1.17.1)

The new release of Hyrax contains many improvements to the DMR++ build, generation and testing process, as well as a broader coverage for many of NASA’s HDF4 / HDF4-EOS2 / HDF5 datasets. In particular, the scripts to generate DMR++ have major improvements in performance, with better testing, checks, and improved documentation on how to generate the DMR++ files and map the variables inside these files to the mature and broadly used OPeNDAP DAP4 protocol.

In this new version of Hyrax, DMR++ now supports a wide range of HDF5, HDF4, and HDF4-EOS2 features, allowing for direct data access in the cloud for many of NASA’s datasets. This means that DMR++ files can be generated for a wide range of NASA’s datasets, including those that may have missing grid information. The DMR++ software includes better support for DAP4, including mapping HDF4 Grids to DAP4 Groups and dimensions. When CF grid variables are missing, these grid variables are generated and embedded in the DMR++ . In addition to more complete support for these archival data file formats, various optimizations to the dmrpp generator have been included to improve the generation, checks, and testing of DMR++ .

DMR++ increases its support for HDF5 by supporting (compressed) compound data types, arrays of strings, and subsetting of HDF5 compact arrays. In addition, there are various fixes to how DMR++ reads variable string data. Lastly, this release provides a fix to reported issues when building DMR++ from NetCDF-4 datasets with enable-CF option set true.

Some minor fixes to Hyrax’s HDF5 handler are incorporated in the new version, along with an improved testsuite. Hyrax’s HDF4 handler is greatly expanded to directly support the DAP4 protocol for NASA’s HDF4 and HDF4-EOS2 datasets. Previously, DAP4 support was available by translating the DAP2 objects, which meant important features of DAP4 were not available. This new 'native' DAP4 implementation continues to support CF grids/variables and the default options of the older software. Finally, the overall performance of the HDF4 handler has been improved resulting in shorter response times.

Various fixes are now incorporated to Hyrax to improve performance and support for DAP4 and DAP4 to DAP2 mappings for various NetCDF cases. Lastly, a better handling of BES Error types and exception cases and improvements to the BESLog has been implemented resulting in more meaningful error messages and timing data for BES commands.

For those with NASA access to JIRA, see the completed issue list for this release: NASA JIRA

1.1. Configuration and behavior updates

As of Hyrax 1.16.8 we have deprecated the following

DEPRECATED: <UseDAP2ResourceUrlResponse />.

Instead, we recommend to use

a). <DatasetUrlResponse type="…" /> ` to configure the type of response that the server will generate when a client attempts to access the unadorned Dataset URL. The type of response is controlled by the value of the type attribute.

where the default type above is `download

Allowed values:

-

download(default)

If the configuration parameterAllowDirectDataSourceAccessis set (present) then the source data file will be returned for the dataset URL. If the configuration parameterAllowDirectDataSourceAccessis not present then a 403 forbidden will be returned for the dataset URL. (This is basically a file retrieval service, any constraint expression submitted with the unadorned dataset URL will be ignored.) -

dsr.

The dap4 DSR response will be returned for the dataset URL.

| This setting is not compatible with DataRequestForm type of “dap2” as the DSR response URL collides with the DAP2 Data Request Form URL. |

-

requestForm

The Hyrax Data Request Form Page will be returned for the dataset URL. Which form is returned is controlled by the DataRequestForm configuration element

b) <DataRequestForm type="…" />

Defines the target DAP data model for the dataset links in the “blue-bar” catalog.html pages. These links point to the DAP Data Request Form for each dataset. This element also determines the type of Data request form page returned when the DatasetUrlResponse type=”requestForm” and the request is for the Dataset URL

Allowed values: dap2 or dap4

c) <AllowDirectDataSourceAccess />

When enabled users will be able to use Hyrax as a file server and download the underlying data files/granules/objects directly, without utilizing the DAP APIs.

* default: disabled

d) <ForceDataRequestFormLinkToHttps />

The presence of this element will cause the Data Request Form interfaces to “force” the dataset URL to HTTPS. This is useful for situations where the sever is sitting behind a connection management tool (like AWS CloudFront) whose outward facing connections are HTTPS but Hyrax is not using HTTPS. Thus the internal URLs being received by Hyrax are on HTTP. When these URLs are exposed via the Data Request Forms they can cause some client’s to have issues with session dropping because the protocols are not consistent.

Default: disabled

2. Overview

This section describes the installation, configuration, and operation of the Hyrax Data server, a data server that integrates structured data with the world wide web. Hyrax is one example of a number of data servers that implement OPeNDAP’s Data Access Protocol (DAP).

For information on how to get Hyrax downloaded and running, please see the Hyrax Downloading and Installation guide that appears later in this manual.

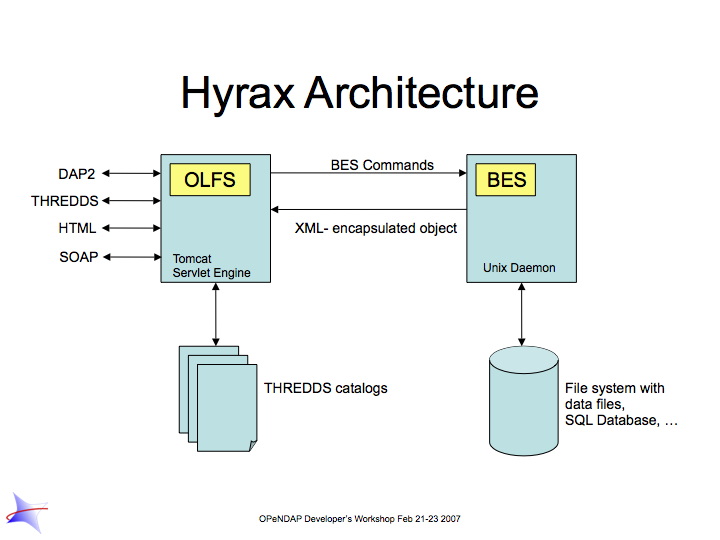

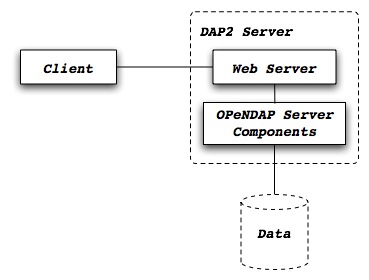

Hyrax uses the Java servlet mechanism to hand off requests from a general web daemon to DAP format-specific software. This provides higher performance for small requests. The servlet front end, which we call the OPeNDAP Lightweight Front end Server (OLFS) looks at each request and formulates a query to a second server (which may or may not on the same machine as the OLFS) called the Back End Server (BES).

The BES is the high-performance server software from HAO. It reads data from the data stores and returns DAP-compliant responses to the OLFS. In turn, the OLFS may pass these response back to the requestor with little or no modification, or it may use them to build more complex responses. The nature of the Inter Process Communication (IPC) between the OLFS and BES is such that they should both be on the same machine or able to communicate over a very high-bandwidth channel.

The OLFS and the BES will run and serve test data immediately after a default installation. Additional configuration is required for them to serve site specific data.

2.1. Features

-

DMR++ : DMR++ provides direct access to data in S3, and so Hyrax supports direct I/O transfers from HDF5 to NetCDF4 when using DMR++ . Hyrax can find the effective URL for a data item when it is accessed via a series of redirect operations, the last of which is a signed AWS URL. This is a common case for data stored in S3. In addition, the BES can sign S3 URLs using the AWS V4 signing scheme. Hyrax implements lazy evaluation of DMR++ files. This improves the efficiency/speed for requests that subset a dataset that contains a large number of variables as only the variables requested will have their Chunk information read and parsed.

-

EDL: EarthDataLogin Hyrax has extensitve support for Earthdata Login Authentication. For configurations that require Hyrax to authenticate remote resources, Hyrax can now utilize

~/.netrc(or anetrcfile may be specified insite.conf) and Hyrax will use the appropriate credentials. Moreover, Hyrax supports EDL token chaining and the handling of tokens is much improved so that redirected are not issues and session (cookie) management is not required of the client. -

THREDDS Catalog Support: Hyrax supports the THREDDS catalogs. It can serve user supplied static catalogs and it will dynamically generate THREDDS catalogs of it’s internal holdings.

-

Dataset Aggregation: Collections of related data resources can be collected into a single dataset using the aggregation features. Typically these are formed for geographic tiles, time series, etc.

-

Adding/modifying dataset content.: Datasets can be modified by the server without having to actually change the underlying files. These views are independently accessible from the original data. Both dataset metadata and data values may be added or changed.

-

Supports multiple source data formats: Server can ingest source data stored as HDF4, HDF4-EOS, HDF5, HDF5-EOS, NetCDF-3, NetCDF-4, CEDAR, FITS, Comma Separated Values, and raw ASCII and Binary formats. Because of Hyrax’s extensible design, it’s easy to add new source data formats.

-

Supports data retrieval in multiple return formats: Hyrax is able to return data in DAP, DAP4, NetCDF-3, NetCDF-4, JSON, CSV, and ASCII formats, Or, you can add your own response types.

-

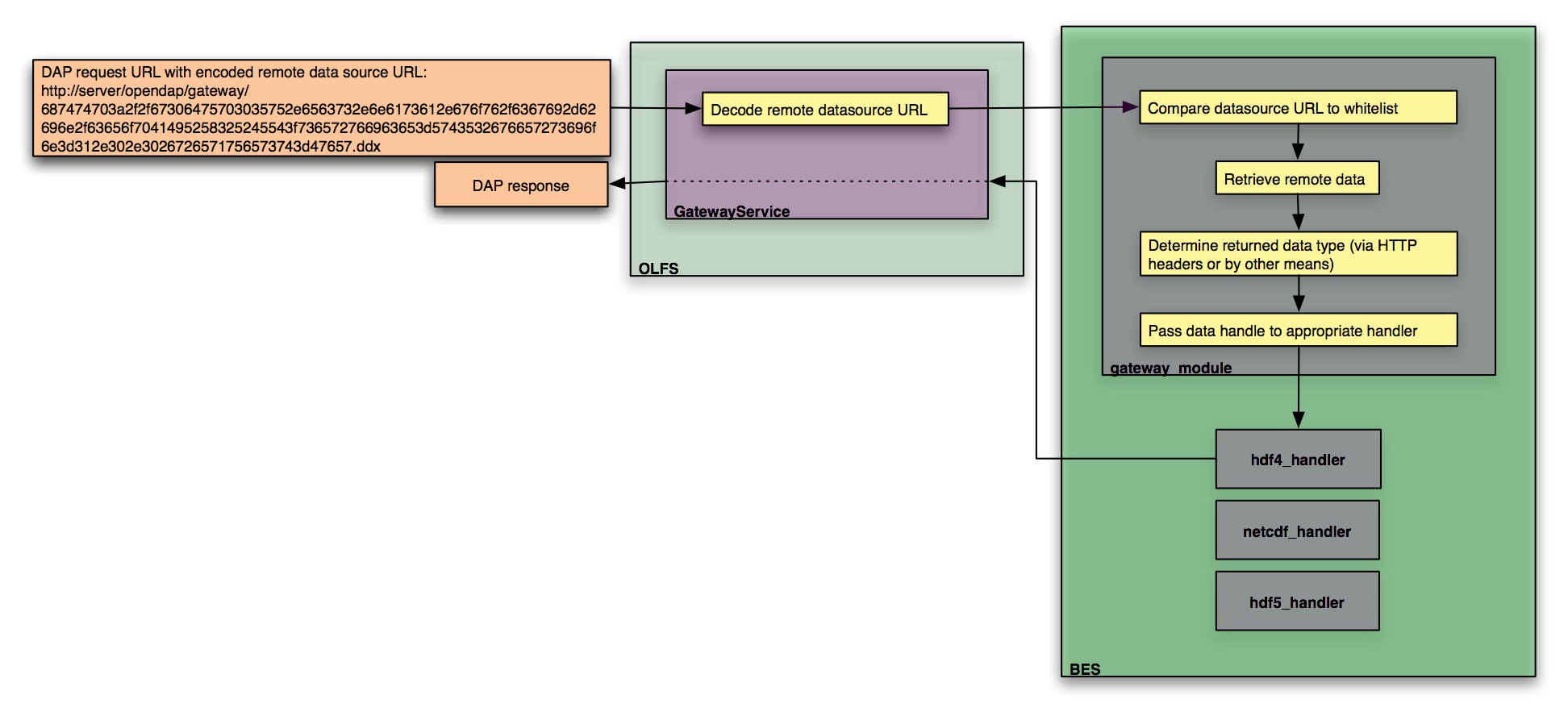

Gateway: Hyrax supports a gateway feature that allows it to provide DAP (and other Hyrax) services for remotely held datasets that are stored in any of Hyrax’s source data formats.

-

RDF: Hyrax provides RDF descriptions of it’s data holdings. These can enable semantic web tools to operate upon the metadata content held in the server.

-

Server Side Functions: Hyrax supports a number of Server side functions out of the box including (but not limited to):

-

geogrid: Subset applicable DAP Grids using latitude and longitude values.

-

grid: Subset any DAP Grid object using the values of it’s map vectors.

-

linear_scale: Apply a linear equation to the data returned, including automatic use of CF attributes.

-

version: The version function provides a list of the server-side processing functions available.

-

New ones are easy to add.

-

-

Extensible WebStart functionality for data clients: Hyrax provides WebStart functionality for a number of Java based DAP clients. It’s simple to add new clients to the list that Hyrax supports.

-

Extensible/Configurable web interface: The web interface for both Hyrax and the administrator’s interface can be customized using CSS and XSL. You can add your organizations logo and specialize the colors and fonts in the presentation of data sets.

-

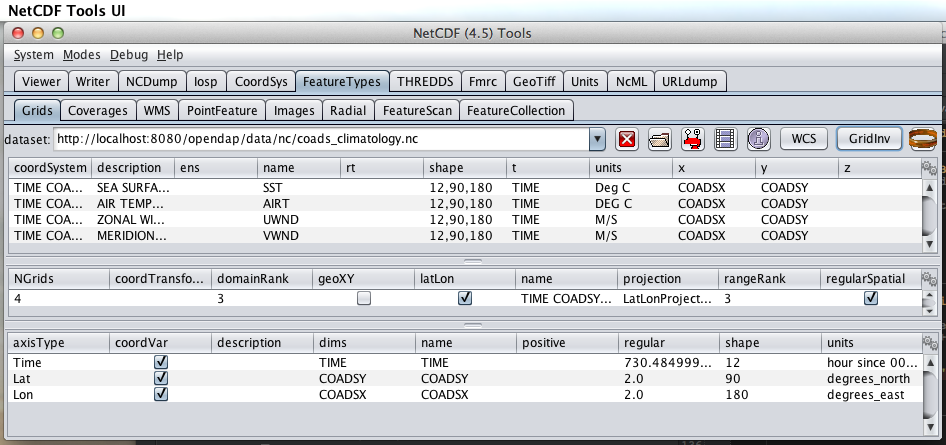

WMS services: Hyrax now supports WMS services via integration with ncWMS.

-

JSON responses: Both metadata and data are now available in a JSON encoding.

-

w10n: Hyrax comes with a complete w10n service stack. W10n navigation is supported through the default catalog where all datasets and "structure" variables appear as graph nodes. Data can be acquired for atomic types or arrays of atomic types in a number of formats.

-

Feature Request: If there is a feature you would like to see but don’t, let us know: support@opendap.org or opendap-tech@opendap.org. (You need to subscribe first.)

2.2. Modules

Hyrax has a number of modules that provide the actual functionality of the server: Reading data files, building different kinds of responses and performing different kinds of server processing operations. Most of these modules work with the BES but some are part of the front (web facing) part of the server.

2.2.1. BES modules

-

Gateway handler (Interoperability between Hyrax and other web services)

-

SQL handler (Unsupported)

Additional Java Modules that use the BES

-

WMS - Web Mapping Service via integration with ncWMS.

Reference Documentation

2.3. Contact Us

We hope you find this software useful, and we welcome your questions and comments.

Technical Support:

-

opendap-tech@opendap.org (You need to subscribe first.)

3. Installation

Hyrax is a data server that implements the DAP2 and DAP4 protocols, works with a number of different data formats and supports a wide variety of customization options from tailoring the look of the server’s web pages to complex server-side processing operations. This page describes how to build the server’s source code. If you’re working on a Linux or OS/X computer, the process is similar so we describe only the linux case; we do not support building the server on Windows operating systems.

There are broadly three ways to install and run Hyrax Data Server

3.1. Docker Installation (Recommended)

This is the simplest way to install and use the latest release of Hyrax.

3.1.1. Prerequisites

-

Docker daemonprocess is running in the background. -

You have a data folder. In this guide, we will assume it is

~/tmp/data/. -

Your data is stored in HDF5/NetCDF4 format, csv, or any other file format for which Hyrax has a data Handler (see all supported file formats by Hyrax)

-

OSX or Linux platform.

3.1.2. Run Hyrax and serve data

-

Open a terminal and download hyrax via DockerHub

You have two options. To download the latest official release of Hyrax data server run:

docker pull opendap/hyrax:latestThis will install the version of Hyrax as described on the official Hyrax data server page. The official releases are labor intensive to produce and only happen once or twice a year.

You can also download the absolute most recent version of Hyrax data server, i.e. that associated with the latest merged commit to the Master branch on Github, by running:

docker pull opendap/hyrax:snapshotBoth of the options above are fully tested using Travis for CI/CD, and pack with the correct versions needed to build Hyrax.

-

Run Hyrax and make your data available on port

8080

Say, in the case you installed the latest using DockerHub,

docker run -d -h hyrax -p 8080:8080 \

--volume ~/tmp/data:/usr/share/hyrax \

--name=hyrax opendap/hyrax:latest

If you are on an OSX system running on Apple silicon (M-series CPUs) docker deployment, you will need the following extra line: --platform linux/amd64 \.

|

The command above identifies the location of your data volume (~/tmp/data) and assigns it to /usr/share/hyrax, which is where Hyrax looks for data in the docker container.

-

Check data is available on local host

By now, you can paste onto any browser the following url to see Hyrax’s landing page

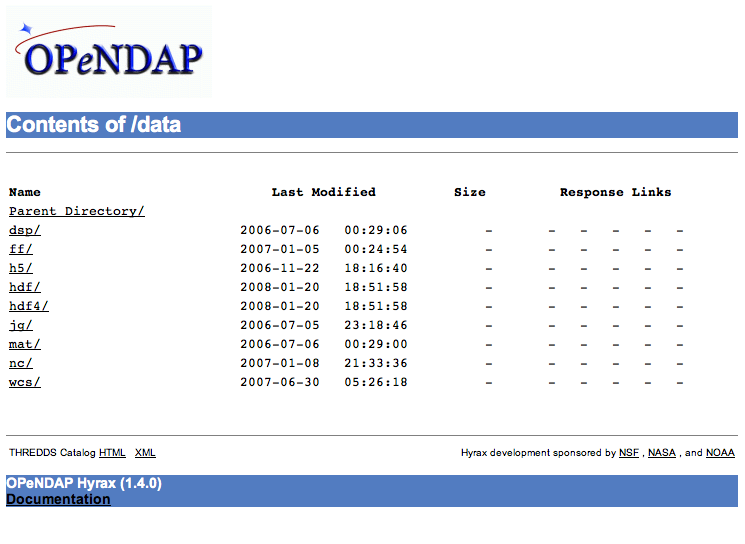

http://localhost:8080/opendap/hyraxMake sure all your data is available and try to download some of it.

The installation of Hyrax comes with various default configutations. If you want to learn how to change the various default configurations, check the overview on the Configuration

3.2. (pre-compiled) Binaries

Prerequisites:

-

Java>=11 -

Tomcat>=9

Installing a Hyrax binary release typically involves the following steps

-

Download the latest Hyrax release (Hyrax 1.17-1). It is composed of:

-

2 RPM files (one for

libdap, one for theBES). -

The OLFS binary distribution file. You can also install the

OLFS Automatic robots.txt, if available.

-

-

Install the

libdapRPM. -

Install the

BESRPM. -

Unpack the

OLFSdistribution file, and install theopendap.warfile into your Tomcat instance’swebappsdirectory. -

(optional)

ncWMS2. You will need to use the EDAL web page to locate the latestncWMS2“Servlet Container” software bundle as a WAR file. Install it into the same Tomcat instance as the OLFS. See here for more instructions.

| The detailed download and installation instructions for Hyrax are published on the download page for each release of the server. Find the latest release and its associated installation details on the Hyrax downloads page. |

3.2.1. BES Installation

Download

It is necessary that you download and install both the libdap and BES binaries.

-

Visit the official OPeNDAP website and go to Latest Release.

-

Scroll down the following page until you reach the section entitled Linux Binaries, then continue scrolling until you see the heading titled BES.

-

You need to download both the

libdapandBESRPMs which should be namedlibdap-x.x.xandbes-x.x.x. -

The downloaded files should be named something like

libdap-x.x.x.el6.x86_64.rpmandbes-x.x.x.static.el6.x86_64.rpm.

| In order to install the RPMs on your system, you must be running a 64bit OS. If you are running 32bit OS, attempting to install the libdap and BES RPMs will result in errors. |

Install

-

Install the libdap and bes RPMs:

sudo yum install libdap-3.x.x.rpm bes-3.x.x.rpm -

Test the

BES:-

start it:

sudo service besd start

(Or use the script in /etc/init.d with sudo: /etc/init.d/besd start) -

connect using a simple client:

bescmdln -

get version information:

BESClient> show version -

exit from

bescmdln:

BESClient> exit

-

| If you are upgrading to Hyrax 1.13.4 or newer from an existing installation older than 1.13.0, in the bes.conf file the keys BES.CacheDir, BES.CacheSize, and BES.CachePrefix have been replaced with BES.UncompressCache.dir, BES.UncompressCache.size, and BES.UncompressCache.prefix respectively. Other changes include the gateway cache configuration (gateway.conf) which now uses the keys Gateway.Cache.dir, Gateway.Cache.size, and Gateway.Cache.prefix to configure its cache. Changing the names enabled the BES to use separate parameters for each of its several caches, which fixes the problem of 'cache collisions.' |

3.2.2. OLFS Installation

Introduction

The OLFS comes with a default configuration that is compatible with the

default configuration of the BES. If you perform a default installation

of both, you should get a running Hyrax server that will be pre-populated

with test data suitable for running integrity tests.

Install Tomcat

-

Install tomcat.noarch:

sudo yum install tomcat.noarch. -

Create the directory

/etc/olfs, change its group to tomcat, and set it group writable:mkdir /etc/olfs chgrp tomcat /etc/olfs chmod g+w /etc/olfs)

Alternatively, get Apache Tomcat-8.x from the Apache Software Foundation and install it wherever you’d like—for example,

/usr/local/.

Download

Follow the steps below to download the latest OLFS distribution:

-

Visit the official OPeNDAP website and go to Latest Release.

-

Scroll down the following page until you reach the section entitled Linux Binaries

-

Directly underneath, you should see the

OLFSdownload link, named something likeOLFS_x.x.x._Web_Archive_File. Click to download. -

The downloaded file will be named something like:

olfs-x.x.x-webapp.tgz.

Unpack

Unpack the jar file with the command

tar -xvf olfs-x.x.x-webapp.tgz

This will unpack the files directory called olfs-x.x.x-webapp.

Install

Inside of the olfs-x.x.x-webapp directory, locate opendap.war and copy it into

Tomcat’s webapps directory:

cp opendap.war /usr/share/tomcat/webapps

Or, if you installed tomcat from the ASF distribution, its web application directory, for example…

/usr/local/apache-tomcat-8.5.34/webapps

CentOS-7/SELinux and Yum installed Tomcat

Recent versions of CentOS-7 are shipped with default SELinux settings that prohibit Tomcat from reading or opening the opendap.war file. This can be addressed by issuing the following two commands:

sudo semanage fcontext -a -t tomcat_var_lib_t /var/lib/tomcat/webapps/opendap.war sudo restorecon -rv /var/lib/tomcat/webapps/

After this you will need to restart Tomcat:

sudo service tomcat restart

Starting and Stopping the OLFS/Tomcat

If you followed this tutorial and are using a YUM-installed Tomcat,

it should already be integrated into the system with a tomcat entry

in /etc/init.d and you should be able to…

-

Start Tomcat:

sudo service tomcat start -

Stop Tomcat:

sudo service tomcat stop

You can verify that the server is running by visiting http://localhost:8080/opendap/. If you have installed Hyrax on a virtual machine, replace localhost with the virtual machine’s IP address.

If you are installing the OLFS in conjunction with ncWMS2 version 2.0 or higher, copy both the opendap.war and the ncWMS2.war files into the Tomcat webapps directory. (Re)Start Tomcat.

|

If you are upgrading Hyrax from any previous installation older than 1.16.5, read this! The internal format of the olfs.xml file has been revised. No previous version of this file will work with Hyrax >=1.16.5. In order to upgrade your system, move your old configuration directory aside (mv /etc/olfs ~/olfs-OLD) and then follow the instruction to install a new OLFS. Once you have it installed and running you will need to review your old configuration and make the appropriate changes to the new olfs.xml to restore your server’s behavior. The other OLFS configuration files have not undergone any structural changes and you may simply replace the new ones that were installed with copies of your previously working ones.

|

To make the server restart with when host boots, use systemctl enable besd and systemctl enable tomcat, or chkconfig besd on and chkconfig tomcat on depending on the specifics of your Linux distribution.

|

3.3. Hyrax GitHub Source Build

If you would like to build Hyrax from source code, you can get signed source distributions from the download page referenced above. In addition, you can get the source code for the server from GitHub, either using the Hyrax project or by following the directions on our developer’s wiki.

3.3.1. Installing Hyrax in Developer Mode

If you are interested in working on Hyrax, we maintain a wiki with a section devoted to Developer Information specific to our software and the development process. You can find information there about developing your own modules for Hyrax.

3.4. WCS Installation

The WCS 2 service comes bundled as part of Hyrax-1.14.0 and newer. For more information about configuring WCS with your installation of Hyrax, please refer to the WCS Installation Guide that appears later in this document.

4. Configuring and Customizing Hyrax

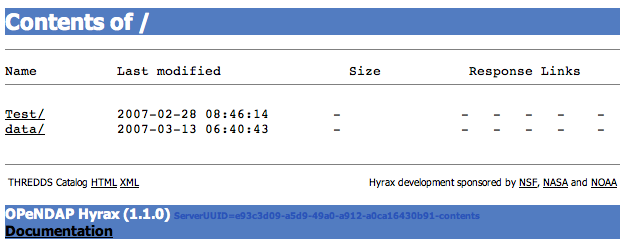

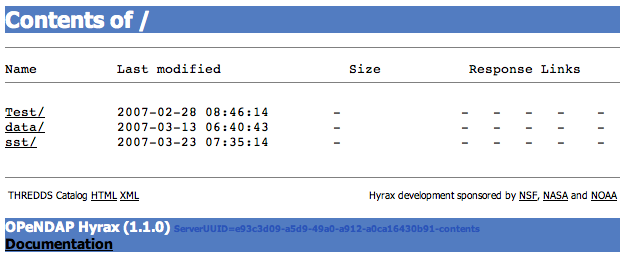

When you install Hyrax for the first time it is pre-configured to serve test data sets that come with each of the installed data handlers. This will allow you to test the server and make sure it is functioning correctly. After that you can customize it for your data.

4.1. BES Configuration

When you launch your Hyrax Data Server, the BES loads the bes.conf located in $prefix/etc/bes/, which instructs to read all the configuration files defined for each module, located in $prefix/etc/bes/modules/. There are 27 configuration files! For example, the $prefix/etc/bes/modules/h5.conf file declares all configuration options for the HDF5 handler.

By default $prefix is in /usr/local in Linux, or simply / if you followed the docker installation.

|

The last final directive in the bes.conf file is to read the $prefix/etc/bes/site.conf file, if it exists. And so, when the default configurations do not suit your needs, or that of your data users, the configuration of the BES can be customized by creating a site.conf and re-defining configuration parameters there. Any configuration reset in the site.conf file will override those set in the bes.conf file.

By default, there is no site.conf file, and thus Hyrax uses the default configurations.

|

The main advantages of having a separate site.conf file are:

-

The

bes.confis static (unaltered), providing a way to check the default configurations. -

The

site.conffile consolidates all of the used configurations into a single file. This is preferable over making changes across multiple files. -

The

site.conffile persists through Hyrax updates.

To learn how to create and configure such site.conf, along with many examples, jump to Custom Module Configuration subsection below.

4.1.1. Basic format of parameters

One way in which the parameters are set in the BES configuration file, is:

Name=Value1 Name+=Value2

The above assigns both Value1 and Value2 to Name, due to the += operator. If instead of += you have =, then Name would be overwritten in the second line, taking only the value of Value2.

The bes.conf file includes all .conf files in the modules directory with

the following:

BES.Include=modules/.*\.conf$

| Regular expressions can be used in the Include parameter to match a set of files. |

And if you would like to include another configuration file you would use the following:

BES.Include=/path/to/configuration/file/blee.conf

Another way to define configuration parameters, is by using key/value pairs. This applies to many of the parameters avaiable, but not all. For example:

SupportEmail = support@opendap.org BES.ServerAdministrator = email:support@opendap.org BES.ServerAdministrator += organization:OPeNDAP Inc. BES.ServerAdministrator += street:165 NW Dean Knauss Dr. BES.ServerAdministrator += city:Narragansett BES.ServerAdministrator+=region:RI BES.ServerAdministrator+=postalCode:02882 BES.ServerAdministrator+=country:US BES.ServerAdministrator+=telephone:+1.401.575.4835 BES.ServerAdministrator+=website:http://www.opendap.org

| parser for the configuration ignores spaces around the '=' and '+=' operators. |

4.1.2. Custom Module Configuration via site.conf

The site.conf is a special configuration file that persists through Hyrax updates,

and here you can store custom module configurations. Below we provide instructions on how to customize a module’s configuration with site.conf

Configuration Instructions

The following instructions work generally for any way you install Hyrax. In addition, we provide instructions for using site.conf when running Hyrax via Docker.

-

Create an empty

site.confin$prefix\etc\beswith the following command:cd $prefix/etc/bes/ sudo touch site.conf

-

Locate the default

.conffile for the module that you would like to customize in$prefix/etc/bes/modules. -

Copy the configuration parameters that you would like to customize from the module’s configuration file into the

site.conf. -

Save your updates to

site.conf. -

Restart the server.

Included in $prefix/etc/bes/ is a template site.conf file, called site.conf.proto. To take advantage of this template you can simply copy it with the following command cp site.conf.proto site.conf. Then, uncomment the configuration parameters that you want to modify and update them.

|

Instructions When Running Hyrax via Docker

If you will be running Hyrax with Docker, you can first create an empty site.conf file on your machine, and point to it when running hyrax. This will allow the site.conf file to persist through any Hyrax update/restart, and even in accidental removing of Hyrax. For this, follow the (similar) steps as before:

-

Create a local

site.conffile. -

Run Hyrax via Docker adding the following line in Step 2 of the (see Docker Hub installation instructions):

--volume $prefix/path_to_your_configuration/site.conf:/etc/bes/site.conf \

-

Activate’s the docker container’s bash shell, by running:

docker exec -it hyrax bash

This will allow you to navigate the docker container, and therefore Hyrax’s directory.

-

Resume Steps 2-4 in general configuration instructions.

-

Restarting the server is optional / no longer needed. When running the server again, make sure to do Step 2.

Example: Pointing to data

There are two general ways to point to data, which depends on your preferred way to install and run Hyrax. When installing/running Hyrax via Docker, Step 2 describes the instruction to point data to Hyrax. Namely, add the following line to your docker run command:

--volume /full/path/data/root/directory:/usr/share/hyrax

By default, Hyrax will read data from /usr/share/hyrax. The /full/path/data/root/directory should be the root directory of your data catalog.

When installing Hyrax from Source or (pre-compiled) Binaries, you will have to set the value

BES.Catalog.catalog.RootDirectory=/full/path/data/root/directory BES.Data.RootDirectory=/dev/null

in the site.conf.

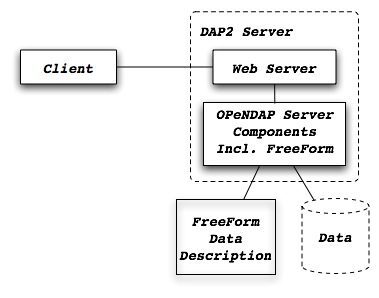

The next step, is to (re)configure any mapping between data source names and data handlers. This is usually taken care of for you already, so you probably won’t have to set this parameter unless you would like to set a new configuration. Each data handler module (netcdf, hdf4, hdf5, freeform, etc…) will have this set depending on the extension of the data files for the data.

For example, in the nc.conf, for the netcdf data handler module, you’ll find the line:

BES.Catalog.catalog.TypeMatch+=nc:.*\.nc(\.bz2|\.gz|\.Z)?$;

When the BES is asked to perform some commands on a particular data

source, it uses regular expressions to figure out which data handler should be used to carry out the commands. The value of the BES.Catalog.catalog.TypeMatch parameter holds the set of regular expressions. The value of this parameter is a list of handlers and expressions in the form handler expression. The regular expressions used by the BES are like those used by grep on Unix and are somewhat cryptic, but once you see the pattern it’s not that bad.

|

For example, in the following 3 examples, the TypeMatch parameter is being told the following:

-

Any data source with a name that ends in .nc should be handled by the netcdf (nc) handler.

BES.Catalog.catalog.TypeMatch+=nc:.*\.nc(\.bz2|\.gz|\.Z)?$;

-

Any file with a .hdf, .HDF, or .eos suffix should be processed using the HDF4 handler (note that case matters)

BES.Catalog.catalog.TypeMatch+=h4:.*\.(hdf|HDF|eos)(\.bz2|\.gz|\.Z)?$;

-

Data sources ending in .dat should use the FreeForm handler.

BES.Catalog.catalog.TypeMatch+=ff:.*\.dat(\.bz2|\.gz|\.Z)?$;

If you fail to configure this correctly, the BES will return error messages stating that the type information has to be provided. It won’t tell you this, however when it starts, only when the OLFS (or some other software) makes a data request. This is because it is possible to use BES commands in place of these regular expressions, although the Hyrax won’t.

NetCDF-4 files and the HDF5 Handler

In the NetCDF example above, although not explicitly, the .nc suffix refers to NetCDF-3 files (i.e. NetCDF classic). NetCDF-3 is an older data model, and as such does not incorporate many of the DataTypes now widely used by the scientific community. As a result, data producers opt to use instead the Enhanced Data Model, i.e. the NetCDF-4. Unfortunately, both NetCDF3 and NetCDF4 data file formats have identical suffix, .nc.

Despite there becoming a common practice to assign the .nc4 suffix to NetCDF4 files, you can expect to find many NetCDF-4 files with a .nc suffix. Since Hyrax’s netcdf handler only covers the NetCDF3 model, any attributes or variable types that are only part of the NetCDF-4 data model will not be properly handled by Hyrax’s data server. At worst, Hyrax will be unable to serve the dataset.

To successfully serve NetCDF4 data, the HDF5 handler should be assigned to any such file. The reason behind this successfull approach is that the NetCDF-4 uses HDF5 library as its backend. However, in the case where your data has both NetCDF3 and NetCDF4, we strongly recommend to rename any NetCDF4 to include the .nc4 suffix. This will facilitate the mapping between NetCDF4 data and HDF5 handler. To find out whether your .nc data file is NetCDF3 or NetCDF4, you can use ncdump.

The mapping assigning the HDF5 handler to any .nc4 file should be defined in the site.conf file as follows:

BES.Catalog.catalog.TypeMatch+=h5:.*\.nc4(\.bz2|\.gz|\.Z)?$;

Below, we provide a concrete example of a site.conf file when serving NetCDF-4 datasets with Groups. Groups are part of both NetCDF4 and HDF5 data models.

Example: Groups in NetCDF4 and HDF5

By default, the Group representation on a dataset is flattened to accomodate CF 1.7 conventions. In addition, the default NC-handler that is used for any .nc4 dataset is based on "Classic NetCDF model" (netCDF-3), which does not incorporate many of the Enhanced NetCDF model (netCDF4) features. As a result, to serve .nc4 data that may contain DAP4 elements not present in DAP2 (see diagram for comparison with DAP2), or serve H5 datasets with unflattened Group representation, one must make the following 2 changes to the default configuration:

-

Set

H5.EnableCF=falseandH5.EnableCFDMR=true. -

Assign the h5 handler when serving

.nc4data via Hyrax.

To enable these changes the site.conf must have the following parameters:

BES.Catalog.catalog.TypeMatch= BES.Catalog.catalog.TypeMatch+=csv:.*\.csv(\.bz2|\.gz|\.Z)?$; BES.Catalog.catalog.TypeMatch+=reader:.*\.(dds|dods|data_ddx|dmr|dap)$; BES.Catalog.catalog.TypeMatch+=dmrpp:.*\.(dmrpp)(\.bz2|\.gz|\.Z)?$; BES.Catalog.catalog.TypeMatch+=ff:.*\.dat(\.bz2|\.gz|\.Z)?$; BES.Catalog.catalog.TypeMatch+=gdal:.*\.(tif|TIF)$|.*\.grb\.(bz2|gz|Z)?$|.*\.jp2$|.*/gdal/.*\.jpg$; BES.Catalog.catalog.TypeMatch+=h4:.*\.(hdf|HDF|eos|HDFEOS)(\.bz2|\.gz|\.Z)?$; BES.Catalog.catalog.TypeMatch+=ncml:.*\.ncml(\.bz2|\.gz|\.Z)?$; BES.Catalog.catalog.TypeMatch+=h5:.*\.(HDF5|h5|he5|H5)(\.bz2|\.gz|\.Z)?$; BES.Catalog.catalog.TypeMatch+=h5:.*\.nc4(\.bz2|\.gz|\.Z)?$; H5.EnableCF=false H5.EnableCFDMR=true

Including and Excluding files and directories

Finally, you can configure the types of information that the BES sends back when a client requests catalog information. The Include and Exclude parameters provide this mechanism, also using a list of regular expressions (with each element of the list separated by a semicolon). In the example below, files that begin with a dot are excluded. These parameters are set in the dap.conf configuration file.

The Include expressions are applied to the node first, followed by the Exclude expressions. For collections of nodes, only the Exclude expressions are applied.

BES.Catalog.catalog.Include=; BES.Catalog.catalog.Exclude=^\..*;

Example: Administrator parameters

The following steps detail how you can update the BES’s server administrator configuration parameters with your organization’s information:

-

Locate the existing server administrator configuration in

/etc/bes/bes.conf:BES.ServerAdministrator=email:support@opendap.org BES.ServerAdministrator+=organization:OPeNDAP Inc. BES.ServerAdministrator+=street:165 NW Dean Knauss Dr. BES.ServerAdministrator+=city:Narragansett BES.ServerAdministrator+=region:RI BES.ServerAdministrator+=postalCode:02882 BES.ServerAdministrator+=country:US BES.ServerAdministrator+=telephone:+1.401.575.4835 BES.ServerAdministrator+=website:http://www.opendap.org

When adding parameters to the ServerAdministrator configuration, notice how, following the first line, we use += instead of just

to add new key/value pairs. += indicates to the BES that we are adding new configuration parameters, rather than replacing those that were already loaded. Had we used just + in the above example, the only configured parameter would have been website. -

Copy the above block of text from its default .conf file to site.conf.

-

In site.conf, update the block of text with your organization’s information; for example…

BES.ServerAdministrator=email:smootchy@woof.org BES.ServerAdministrator+=organization:Mogogogo Inc. BES.ServerAdministrator+=street:165 Buzzknucker Blvd. BES.ServerAdministrator+=city: KnockBuzzer BES.ServerAdministrator+=region:OW BES.ServerAdministrator+=postalCode:00007 BES.ServerAdministrator+=country:MG BES.ServerAdministrator+=telephone:+1.800.555.1212 BES.ServerAdministrator+=website:http://www.mogogogo.org

-

Save your changes to site.conf.

-

Restart the server.

4.1.3. Administration & Logging

In the bes.conf or site.conf file, the BES.ServerAdministrator parameter is the address used in various mail messages returned to clients. Set this so that the email’s recipient will be able to fix problems and/or respond to user questions. Also set the log file and log level. If the BES.LogName is set to a relative path, it will be treated as relative to the directory where the BES is started. (That is, if the BES is installed in /usr/local/bin but you start it in your home directory using the parameter value below, the log file will be bes.log in your home directory.)

BES.ServerAdministrator=webmaster@some.place.edu BES.LogName=./bes.log BES.LogVerbose=no

Because the BES is a server in its own right, you will need to tell it which network port and interface to use. Assuming you are running the BES and OLFS (i.e., all of Hyrax) on one machine, do the following:

User and Group Parameters

In the bes.conf or site.conf file, the BES must be started as root. One of the things that the BES does first is to start a listener that listens for requests to the BES. This listener is started as root, but then the User and Group of the process is set using parameters in the BES configuration file:

BES.User=user_name BES.Group=group_name

You can also set these to a user id and a group id. For example:

BES.User=#172 BES.Group=#14

Setting the Networking Parameters

In the bes.conf or site.conf configuration file, we have settings for how the BES should listen for requests:

BES.ServerPort=10022 # BES.ServerUnixSocket=/tmp/opendap.socket

The BES.ServerPort tells the BES which TCP/IP port to use when listening for commands. Unless you need to use a different port, use the default. Ports with numbers less than 1024 are special, otherwise you can use any number under 65536. That being said, stick with the default unless you know you need to change it.

In the default bes.conf file we have commented the ServerUnixSocket parameter, which disables I/O over that device. If you need UNIX socket I/O, uncomment this line, otherwise leave it commented. The fewer open network I/O ports, the easier it is to make sure the server is secure.

If both ServerPort and ServerUnixSocket are defined, the BES listens on both the TCP port and the Unix Socket. Local clients on the same machine as the BES can use the unix socket for a faster connection. Otherwise, clients on other machines will connect to the BES using the BES.ServerPort value.

| The OLFS always uses only the TCP socket, even if the UNIX socket is present. |

4.1.4. Debugging Tip

In bes.conf, use the BES.ProcessManagerMethod

parameter to control whether the BES acts like a normal Unix server.

The default value of multiple causes the BES to accept many

connections at once, like a typical server. The value single causes it

to accept a single connection (process the commands sent to it and exit),

greatly simplifying troubleshooting.

BES.ProcessManagerMethod=multiple

Controlling how compressed files are treated

Compression parameters are configured in the bes.conf configuration file.

The BES will automatically recognize compressed files using the bz2,

gzip, and Unix compress (Z) compression schemes. However, you need to

configure the BES to accept these file types as valid data by making

sure that the filenames are associated with a data handler. For

example, if you’re serving netCDF files, you would set

BES.Catalog.catalog.TypeMatch so that it includes

nc:.*\.(nc|NC)(\.gz|\.bz2|\.Z)?$;. The first part of the regular

expression must match both the filename and the '.nc' extension, and the second

part must match the suffix, indicating the file is compressed (either .gz,

.bz2 or .Z).

When the BES is asked to serve a file that has been compressed, it first

must decompress it before passing it to the correct data handler (except

for those formats which support 'internal' compression, such as HDF4).

The BES.CacheDir parameter tells the BES where to store the

uncompressed file. Note that the default value of /tmp is probably less

safe than a directory that is used only by the BES for this purpose.

You might, for example, want to set this to <prefix>/var/bes/cache.

The BES.CachePrefix parameter is used to set a prefix for the cached files so that when a directory like /tmp is used, it is easy for the BES to recognize which files are its responsibility.

The BES.CacheSize parameter sets the size of the cache in megabytes. When the size of the cached files exceeds this value, the cache will be purged using a least-recently-used approach, where the file’s access time is the 'use time'. Because it is usually impossible to determine the sizes of data files before decompressing them, there may be times when the cache holds more data than this value. Ideally this value should be several times the size of the largest file you plan to serve.

Loading Software Modules

Virtually all of the BES’s functions are contained in modules that are loaded when the server starts up. Each module is a shared-object library. The configuration for each of these modules is contained in its own configuration file and is stored in a directory called modules. This directory is located in the same directory as the bes.conf file: $prefix/etc/bes/modules/.

By default, all .conf files located in the modules are loaded by the BES per this parameter in the bes.conf configuration file:

BES.Include=modules/.*\.conf$

So, if you don’t want one of the modules to be loaded, simply change its name to, say, nc.conf.sav and it won’t be loaded.

For example, if you are installing the general purpose server module (the dap-server module) then a dap-server.conf file will be installed in the modules directory. Also, most installations will include the dap module, allowing the BES to serve OPeNDAP data. This configuration file, called dap.conf, is also included in the modules directory. For a data handler, say netcdf, there will be an nc.conf file located in the modules directory.

Each module should contain within it a line that tells the BES to load the module at startup:

BES.modules+=nc BES.module.nc=/usr/local/lib/bes/libnc_module.so

Module specific parameters will be included in its own configuration file. For example, any parameters specific to the netcdf data handler will be included in the nc.conf file.

Symbolic Links

If you would like symbolic links to be followed when retrieving data and for viewing catalog entries, then you need to set the following two parameters: the BES.FollowSymLinks parameter and the BES.RootDirectory parameter. The BES.FollowSymLinks parameter is for non-catalog containers and is used in conjunction with the BES.RootDirectory parameter. It is not a general setting. The BES.Catalog.catalog.FollowSymLinks is for catalog requests and data containers in the catalog. It is used in conjunction with the BES.Catalog.catalog.RootDirectory parameter above. The default is set to No in the installed configuration file. To allow for symbolic links to be followed you need to set this to Yes.

The following is set in the bes.conf file:

BES.FollowSymLinks=No|Yes

And this one is set in the dap.conf file in the modules directory:

BES.Catalog.catalog.FollowSymLinks=No|Yes

Parameters for Specific Handlers

Parameters for specific modules can be added to the BES configuration file for that specific module. No module-specific parameters should be added to bes.conf.

4.2. OLFS Configuration

The OLFS is the outward facing component of the Hyrax server. This section provides OLFS configuration instructions.

| The OLFS web application relies on one or more instances of the BES to provide it with data access and basic catalog metadata. |

The OLFS web application stores its configuration state in a number of files. You can change the server’s default configuration by modifying the content of one or more of these files and then restarting Tomcat or the web application. These configuration files include the following:

-

olfs.xml: Contains the primary OLFS configuration, such as BES associations, directory view instructions, gateway service location, and static THREDDS catalog behavior. Located at/etc/olfs/olfs.xml. For more information aboutolfs.xml, please see theolfs.xmlconfiguration section. -

catalog.xml: Master(top-level) THREDDS catalog content for static THREDDS catalogs. Located at/etc/olfs/catalog.xml. -

viewers.xml: Contains the localized viewers configuration. Located at/etc/olfs/viewers.xml.

Generally, you can meet your configuration needs by making changes to

olfs.xml and catalog.xml. For more information about where these files

might be located, please see the following section, OLFS Configuration Files.

4.2.1. OLFS Configuration Files

If the default configuration of the OLFS works for your intended use, there is no need to create a persistent localized configuration; however, if you need to change the configuration, we strongly recommend that you enable a persistent local configuration. This way, updating the web application won’t override your custom configuration.

The OLFS locates its configuration file by looking at the value of

the OLFS_CONFIG_DIR user environment variable:

-

If the variable is set and its value is the pathname of an existing directory that is both readable and writable by Tomcat, the OLFS will use it.

-

If the directory

/etc/olfsexists and is readable and writable by Tomcat, the OLFS will use it. -

If the directory

/usr/share/olfsexists and is readable and writable by Tomcat, then the OLFS will use it. (This was added for Hyrax 1.14.1.)

If none of the above directories exist or the variable has not been set,

the OLFS uses the default configuration bundled in the web application

web archive file (opendap.war). In this way, the OLFS can start

without a persistent local configuration.

4.2.2. Create a Persistent Local Configuration

You can easily enable a persistent local configuration for the OLFS by

creating an empty directory and identifying it with the

OLFS_CONFIG_DIR environment variable:

export OLFS_CONFIG_DIR="/home/tomcat/hyrax"

Alternately, you can create /etc/olfs or /usr/share/olfs.

Once you have created the directory (and, in the first case, set the environment variable), restart Tomcat. Restarting Tomcat prompts the OLFS move a copy of its default configuration into the empty directory and then use it. You can then edit the local copy.

| The directory that you create must be both readable and writable by the user who is running Tomcat. |

4.2.3. olfs.xml Configuration File

The olfs.xml file contains the core configuration of the Hyrax

front-end service. The following subsections detailed its contents.

At the document’s root is the <OLFSConfig> element. It contains several

elements that supply the configuration for the OLFS.

The following is an example OLFS Configuration file:

<?xml version="1.0" encoding="UTF-8"?>

<OLFSConfig>

<BESManager>

<BES>

<prefix>/</prefix>

<host>localhost</host>

<port>10022</port>

<timeOut>300</timeOut>

<maxResponseSize>0</maxResponseSize>

<ClientPool maximum="200" maxCmds="2000" />

</BES>

<NodeCache maxEntries="20000" refreshInterval="600"/>

<SiteMapCache refreshInterval="600" />

</BESManager>

<ThreddsService prefix="thredds" useMemoryCache="true" allowRemote="true" />

<GatewayService prefix="gateway" useMemoryCache="true" />

<!-- DEPRECATED UseDAP2ResourceUrlResponse / -->

<DatasetUrlResponse type="download"/>

<DataRequestForm type="dap4" />

<!-- AllowDirectDataSourceAccess / -->

<HttpPost enabled="true" max="2000000"/>

<!-- AddFileoutTypeSuffixToDownloadFilename / -->

<!-- PreloadNcmlIntoBes -->

<!-- CatalogCache>

<maxEntries>10000</maxEntries>

<updateIntervalSeconds>10000</updateIntervalSeconds>

</CatalogCache -->

<!--

'Bot Blocker' is used to block access from specific IP addresses

and by a range of IP addresses using a regular expression.

-->

<!-- BotBlocker -->

<!-- <IpAddress>127.0.0.1</IpAddress> -->

<!-- This matches all IPv4 addresses, work yours out from here.... -->

<!-- <IpMatch>[012]?\d?\d\.[012]?\d?\d\.[012]?\d?\d\.[012]?\d?\d</IpMatch> -->

<!-- Any IP starting with 65.55 (MSN bots the don't respect robots.txt -->

<!-- <IpMatch>65\.55\.[012]?\d?\d\.[012]?\d?\d</IpMatch> -->

<!-- /BotBlocker -->

<!--

'Timer' enables or disables the generation of internal timing metrics for the OLFS

If commented out the timing is disabled. If you want timing metrics to be output

to the log then uncomment the Timer and set the enabled attribute's value to "true"

WARNING: There is some performance cost to utilizing the Timer.

-->

<!-- Timer enabled="false" / -->

</OLFSConfig><BESManager> Element (required)

The BESManager information is used whenever the software needs to access the BES’s services. This configuration is key to the function of Hyrax, for in it is defined each BES instance that is connected to a given Hyrax installation. The following examples will show a single BES example. For more information on configuring Hyrax to use multiple BESs look here.

Each BES is identified using a seperate <BES> child element inside

of the <BESManager> element:

<BESManager>

<BES>

<prefix>/</prefix>

<host>localhost</host>

<port>10022</port>

<timeOut>300</timeOut>

<maxResponseSize>0</maxResponseSize>

<ClientPool maximum="10" maxCmds="2000" />

</BES>

<NodeCache maxEntries="20000" refreshInterval="600"/>

<SiteMapCache cacheFile="/tmp/SiteMap.cache" refreshInterval="600" />

</BESManager><BES> Child Elements

The <BES> child elements provide the OLFS with connection and control

information for a BES. There are three required child elements

within a <BES> element and four optional child elements:

-

<prefix>element (required): This element contains the URL prefix that the OLFS will associate with this BES. It also maps this BES to the URI space that the OLFS services.The prefix is a token that is placed between the

host:port/context/part of the Hyrax URL and the catalog root. The catalog root is used to designate a particular BES instance in the event that multiple BESs are available to a single OLFS.If you have maintained the default configuration of a single BES, the tag must be designated by a forward slash:

<prefix>/</prefix>.There must be at least one BESelement in theBESManagerhandler configuration whose prefix has a value of/. There may be more than one<BES>, but only this one is required.When using multiple BESs, each BES must have an exposed mount point as a directory (aka collection) in the URI space where it is going to appear. It is important to note that the prefix string must always begin with the slash (

/) character:<prefix>/data/nc</prefix>. For more information, see Configuring With Multiple BESs. -

<host>element (required): Contains the host name or IP address of the BES, such as<host>test.opendap.org</host>. -

<port>element (required): Contains port number on which the BES is listening, such as<port>10022</port>. -

<timeOut>element (optional): Contains the timeout time, in seconds, for the OLFS to wait for this BES to respond, such as<timeOut>600</timeOut>. Its default value is 300. -

<maxResponseSize>element (optional): Contains in bytes the maximum response size allowed for this BES. Requests that produce a larger response will receive an error. Its default value of zero indicates that there is no imposed limit:<maxResponseSize>0</maxResponseSize>. -

<ClientPool>element (optional): Configures the behavior of the pool of client connections that the OLFS maintains with this particular BES. These connections are pooled for efficiency and speed:<ClientPool maximum="200" maxCmds="2000" />.Notice that this element has two attributes,

maximumandmaxCmds:-

The

maximumattribute specifies the maximum number of concurrent BES client connections that the OLFS can make. Its default value is 200. -

The

maxCmdsattribute specifies the maximum number of commands that can be issued over a particularBESClientconnection. The default is 2000.

-

If the <ClientPool> element is missing, the pool (maximum) size defaults

to 200 and maxCmds defaults to 2000.

<NodeCache> Child Element (optional)

The NodeCache element controls the state of the in-memory LRU cache for BES

catalog/node responses. It has two attributes, refreshInterval and

maxEntries.

The refreshInterval attribute specifies the time (in seconds) that any

particular item remains in the cache. If the underlying system has a lot of

change (model result output etc) then making this number smaller will increase

the rate at which the change becomes "available" through the Hyrax service, at

the expense of more cache churn and slower responses. If the underlying system

is fairly stable (undergoes little change) then refreshInterval can be larger

which will mean less cache churn and faster responses.

The maxEntries attribute defines the maximum number of entries to allowed in

the cache. If the serviced collection is large then making this larger will

definitely improve response times for catalogs etc.

Example:

<NodeCache maxEntries="20000" refreshInterval="600"/><SiteMapCache> Child Element (optional)

The SiteMapCache element defines the location and life span of the SiteMap

response cache. A cache for the BES SiteMap response can be time consuming to

produce for larger systems (~4 minutes for a system with 110k directories and

560k files) This configuration element addresses this by providing a location

and refresh interval for a SiteMap cache. SiteMapCache has two attributes,

cacheFile and refreshInterval.

The optional cacheFile attribute may be used to identify a particular location

for the SiteMap cache file, if not provided it will be placed by default into

cache directory located in the active OLFS configuration directory.

The refreshInterval attribute expresses, in seconds, the time that a SiteMap

is held in the cache before the system generates a new one.

Example:

<SiteMapCache cacheFile="/tmp/SiteMap.cache" refreshInterval="600" /><ThreddsService> Element (optional)

This configuration parameter controls the following:

-

The location of the static THREDDS catalog root in the URI space serviced by Hyrax.

-

Whether the static THREDDS catalogs are held in memory or read from disk for each request.

-

If the server will broker remote THREDDS catalogs and their data by following

thredds:catalogReflinks that point to THREDDS catalogs on other systems.

The following is an example configuration for the <ThreddsService> element:

<ThreddsService prefix="thredds" useMemoryCache="true" allowRemote="false" />Notice that <ThreddsService> has several attributes:

-

prefixattribute (optional): Sets the name of the static THREDDS catalogs' root in Hyrax. For example, if the prefix isthredds, thenhttp://localhost:8080/opendap/thredds/will give you the top-level static catalog, which is typically the contents of the file/etc/olfs/opendap/catalog.xml. This attribute’s default value isthredds.

-

useMemoryCacheattribute (optional): This is a boolean value with a default value oftrue.-

If the value of this attribute is set to

true, the servlet will ingest all of the static catalog files at startup and hold their contents in memory, which is faster but more memory intensive. -

If set to

false, each request for a static THREDDS catalog will cause the server to read and parse the catalog from disk, which is slower but uses less memory.

See this page for more information about the memory caching operations.

-

-

allowRemoteattribute (optional): If this attribute is present and its value is set totrue, then the server will "broker" remote THREDDS catalogs and the data that they serve. This means that the server, not the client, will perform the following steps:-

Retrieve the remote catalogs.

-

Render them for the requesting client.

-

Provide an interface for retrieving the remote data.

-

Allow Hyrax to perform any subsequent processing before returning the result to the requesting client.

This attribute has a default value of

false. -

<GatewayService> (optional)

Directs requests to the Gateway Service:

<GatewayService prefix="gateway" useMemoryCache="true" />The following are the attributes of <GatewayService>:

-

prefixattribute (optional): Sets location of the gateway service in the URI space serviced by Hyrax. For example, if the prefix isgateway, thenhttp://localhost:8080/opendap/gateway/should give you the Gateway Service page. This attribute’s default value isgateway. -

useMemoryCacheattribute (optional): See the previous section for more information.

<UseDAP2ResourceUrlResponse /> element (DEPRECATED)

The UseDAP2ResourceUrlResponse key has been deprecated.

Use DatasetUrlResponse and DataRequestForm to determine what kind of

response Hyrax will return for the dataset URL.

This element controls the type of response that Hyrax will provide to a client’s request for the data resource URL:

<UseDAP2ResourceUrlResponse />

When this element is present, the server will respond to

requests for data resource URLs by returning the DAP2 response

(either an error or the underlying data object). Commenting out or removing

the <UseDAP2ResourceUrlResponse /> element will cause the server to return the

DAP4 DSR response when a dataset resource URL is requested.

NOTE: DAP2 responses are not clearly defined by any specification, whereas DAP4 DSR responses are well-defined by a specification.

This element has no attributes or child elements and is enabled by default.

<DatasetUrlResponse type="download|requestForm|dsr"/>

The DatasetUrlResponse configuration element is used to configure the type of response

that the server will generate when a client attempts to access the Dataset URL.

The type of response is controlled by the value of the type attribute.

There are three supported values are: dsr, download, and requestForm.

-

download- If the configuration parameter AllowDirectDataSourceAccess is set (present) then the source data file will be returned for the dataset URL. If the configuration parameter AllowDirectDataSourceAccess is not present then a 403 forbidden will be returned for the dataset URL. (This is basically a file retrieval service, any constraint expression submitted with the dataset URL will be ignored.) -

requestForm- The Hyrax Data Request Form Page will be returned for the dataset URL. -

dsr- The dap4 DSR response will be returned for the dataset URL.

The default value is download:

<DatasetUrlResponse type="download"/><DataRequestForm type="dap2|dap4"/>

The value of the DataRequestForm element defines these server behaviors:

-

The DAP centric view of the catalog pages. This value controls if the catalog pages are of the DAP2 or DAP4 form. The "blue-bar" catalog(catalog.html) pages (catalog.html) for the preferred DAP data model contain links specifically associated with that data model. This includes the link to the Data Request Form.

-

This element also determines the type of Data request form page returned when the

DatasetUrlResponsetype is set torequestFormand the request is for the Dataset URL the request will be redirected to the DAP2 or DAP4 Data Request form.

Supported type values are: dap2 and dap4

The default value is dap4:

<DataRequestForm type="dap4" /><AllowDirectDataSourceAccess/> element (optional)

The <AllowDirectDataSourceAccess/> element controls the user’s

ability to directly access data sources via the Hyrax web interface:

<!-- AllowDirectDataSourceAccess / -->If this element is present and not commented out, a client can retrieve an entire data source (such as an HDF file) by requesting it through the HTTP URL interface.

This element has no attributes or child elements and is disabled by default. We recommend that you leave it unchanged, unless you want users to be able to circumvent the OPeNDAP request interface and have direct access to the data products stored on your server.

<ForceDataRequestFormLinkToHttps/> element (optional)

'ForceDataRequestFormLinkToHttps' - The presence of this element will cause the Data Request Form interfaces to "force" the dataset URL to HTTPS. This is useful for situations where the sever is sitting behind a connection management tool (like CloudFront) whose outward facing connections are HTTPS but Hyrax is not using HTTPS. Thus the internal URLs being received by Hyrax are on HTTP. When these URLs are exposed via the Data Request Forms they can cause some clients issues with session dropping because the protocols are not consistent.

<ForceDataRequestFormLinkToHttps /><AddFileoutTypeSuffixToDownloadFilename /> element (optional)

This optional element controls how the server constructs the download file name that is transmitted in the HTTP Content-Disposition header:

<AddFileoutTypeSuffixToDownloadFilename />For example, suppose the <AddFileoutTypeSuffixToDownloadFilename /> element is

either commented out or not present. When a user requests a data response from

somedatafile.hdf in netCDF-3 format, the HTTP Content-Disposition header will be set

like this:

Content-Disposition: attachment; filename="somedatafile.hdf"

However, if the <AddFileoutTypeSuffixToDownloadFilename /> is present, then the

resulting response will have an HTTP Content-Disposition header:

Content-Disposition: attachment; filename="somedatafile.hdf.nc"

By default the server ships with this disabled.

<BotBlocker> (optional)

This optional element can be used to block access from specific IP addresses or a range of IP addresses using regular expressions:

<BotBlocker>

<IpAddress>128.193.64.33</IpAddress>

<IpMatch>65\.55\.[012]?\d?\d\.[012]?\d?\d</IpMatch>

</BotBlocker><BotBlocker> has the following child elements:

-

<IpAddress>element: The text value of this element should be the IP address of a system that you would like to block from accessing your service. For example,<IpAddress>128.193.64.33</IPAddress>Will block the system located at128.193.64.33from accessing your server.There can be zero or more

<IpAddress>child elements in the<BotBlocker>element. -

<IpMatch>element: The text value of this element should be the regular expression that will be used to match the IP addresses of clients attempting to access Hyrax. For example,<IpMatch>65\.55\.[012]?\d?\d\.[012]?\d?\d</IpMatch>matches all IP addresses beginning with65.55, and thus blocks access for clients whose IP addresses lie in that range.There can be zero or more

<IpMatch>child elements in<BotBlockerelement.

Developer Options

These configuration options are intended to be used by developers that are engaged in code developement for components of Hyrax. They are not meant to be enabled in any kind of production environment. They are included here for transparency and to help potential contributors to the Hyrax project.

<Timer>

The <Timer> attribute enables or disables the generation of internal timing metrics for the OLFS:

<Timer enabled="true"/>

Timer has a single attribute, enabled, which is a boolean value.

Uncommenting this value and setting it to true will output timing metrics to the log.

Enabling the Timer will impose significant performance overhead on the

server’s operation and should ony be done in an effort to understand the

relative times spent in different operations--not as a mechanism for

measuring the server’s objective performance.

|

<ingestTransformFile> child element (developer)

This child element of the ThreddsService element is a special code development option

that allows a developer to specify the fully qualified path to an XSLT file that will be

used to preprocess each THREDDS catalog file read from disk:

Example:

<ingestTransformFile>/fully/qualified/path/to/transfrm.xsl</ingestTransformFile>The default version of this file, found in

$CATALINA_HOME/webapps/opendap/xsl/threddsCatalogIngest.xsl,

processes the thredds:datasetScan elements in each THREDDS catalog

so that they contain specific content for Hyrax.

4.2.4. Viewers Service (viewers.xml file)

The Viewers service provides, for each dataset, an HTML page that contains

links to Java WebStart applications and to WebServices, such as WMS and WCS,

that can be used in conjunction with the dataset. The Viewers

service is configured via the contents of the viewers.xml file, typically located

at the following location: /etc/olfs/viewers.xml.

viewers.xml Configuration File

The viewers.xml contains a list of two types of elements:

-

<JwsHandler>elements -

<WebServiceHandler>elements

The details of these are discussed elsewhere in the documentation. The following is an example configuration:

<ViewersConfig>

<JwsHandler className="opendap.webstart.IdvViewerRequestHandler">

<JnlpFileName>idv.jnlp</JnlpFileName>

</JwsHandler>

<JwsHandler className="opendap.webstart.NetCdfToolsViewerRequestHandler">

<JnlpFileName>idv.jnlp</JnlpFileName>

</JwsHandler>

<JwsHandler className="opendap.webstart.AutoplotRequestHandler" />

<WebServiceHandler className="opendap.viewers.NcWmsService" serviceId="ncWms">

<applicationName>Web Mapping Service</applicationName>

<NcWmsService href="/ncWMS/wms" base="/ncWMS/wms" ncWmsDynamicServiceId="lds" />

</WebServiceHandler>

<WebServiceHandler className="opendap.viewers.GodivaWebService" serviceId="godiva">

<applicationName>Godiva WMS GUI</applicationName>

<NcWmsService href="http://localhost:8080/ncWMS/wms" base="/ncWMS/wms" ncWmsDynamicServiceId="lds"/>

<Godiva href="/ncWMS/godiva2.html" base="/ncWMS/godiva2.html"/>

</WebServiceHandler>

</ViewersConfig>4.2.5. Logging

For information about logging, see the Hyrax Logging Configuration Documentation.

4.2.6. Authentication and Authorization

The following subsections detail authentication and authorization.

Apache Web Server (httpd)

If your organization desires secure access and authentication layers for Hyrax, the recommended method is to use Hyrax in conjunction the Apache Web Server (httpd).

Most organizations that use secure access and authentication for their web presence are already doing so via Apache Web Server, and Hyrax can be integrated nicely with this existing infrastructure.

More about integrating Hyrax with Apache Web Server can be found at these pages:

Tomcat

Hyrax may be used with the security features implemented by Tomcat for authentication and authorization services. We recommend that you read carefully and understand the Tomcat security documentation.

For Tomcat 7.x see:

For Tomcat 8.5.x see:

We also recommend that you read chapter 12 of the Java Servlet Specification 2.4 that decribes how to configure security constraints at the web-application-level.

Tomcat security requires fairly extensive additions to the web.xml

file located here: ${CATALINA_HOME}/webapps/opendap/WEB-INF/web.xml

Altering the <servlet> definitions may render your Hyrax

server inoperable.

|

Examples of security content for the web.xml file can be found in the

persistent content directory of the Hyrax server, which by default is

located here $CATALINA_HOME/webapps/opendap/WEB-INF/conf/TomcatSecurityExample.xml

Limitations

Tomcat security officially supports context-level authentication. This means that you can restrict access to the collection of servlets running in a single web application (i.e. all of the stuff that is defined in a single web.xml file). You can call out different authentication rules for different `<url-pattern>`s within the web application, but only clients that do not cache ANY security information will be able to easily access the different areas.

For example, in your web.xml file you might have the following:

<security-constraint>

<web-resource-collection>

<web-resource-name>fnoc1</web-resource-name>

<url-pattern>/hyrax/nc/fnoc1.txt</url-pattern>

</web-resource-collection>

<auth-constraint>

<role-name>fn1</role-name>

</auth-constraint>

</security-constraint>

<security-constraint>

<web-resource-collection>

<web-resource-name>fnoc2</web-resource-name>

<url-pattern>/hyrax/nc/fnoc2.txt</url-pattern>

</web-resource-collection>

<auth-constraint>

<role-name>fn2</role-name>

</auth-constraint>

</security-constraint>

<login-config>

<auth-method>BASIC</auth-method>

<realm-name>MyApplicationRealm</realm-name>

</login-config>Where the security roles fn1 and fn2 (defined in the tomcat-users.xml file) have no common members.

The complete URI’s would be…

http://localhost:8080/mycontext/hyrax/nc/fnoc1.txt http://localhost:8080/mycontext/hyrax/nc/fnoc2.txt

This works for clients that do not cache anything; however, if you access

these URLs with a typical internet browser, authenticating one URI

would lock you out of the other URI until you "reset" the browser by

purging all caches.

This happens, because, in the exchange between Tomcat and the

client, Tomcat sends the header

WWW-Authenticate: Basic realm="MyApplicationRealm",

and the client authenticates.

When you access the second URI, Tomcat sends the same authentication challenge

with the same WWW-Authenticate header. The client, having recently authenticated to

this realm-name (defined in the <login-config> element in the

web.xml file - see above), resends the authentication information, and,

since it is not valid for that url pattern, the request is denied.

Persistence

Be sure to back up your modified web.xml file to a location outside of the $CATALINA_HOME/webapps/opendap directory, as newly-installed versions of Hyrax will overwrite it.

You could, for example, use an XML ENTITY and an entity reference in the web.xml.

This will cause a local file containing the security configuration to be included in the

web.xml. For example…

-

Add the ENTITY

[<!ENTITY securityConfig SYSTEM "file:/fully/qualified/path/to/your/security/config.xml">]to the !DOCTYPE declaration at the top of the web.xml.

-

Add an entity reference (securityConfig, as above) to the content of the web-app element. This would cause your externally held security configuration to be included in the web.xml file.

. The following is an example ENTITY configuration:

<?xml version="1.0" encoding="ISO-8859-1"?>

<!DOCTYPE web-app

PUBLIC "-//Sun Microsystems, Inc.//DTD Web Application 2.2//EN"

"http://java.sun.com/j2ee/dtds/web-app_2_2.dtd"

[<!ENTITY securityConfig SYSTEM "file:/fully/qualified/path/to/your/security/config.xml">]

>

<web-app>

<!--

Loads a persistent security configuration from the content directory.

This configuration may be empty, in which case no security constraints will be

applied by Tomcat.

-->

&securityConfig;

.

.

.

</web-app>This will not prevent you from losing your web.xml file when a new version of Hyrax is installed, but adding the ENTITY to the new web.xml file is easier than remembering an extensive security configuration.

4.2.7. Compressed Responses and Tomcat

Many OPeNDAP clients accept compressed responses. This can greatly increase the efficiency of the client/server interaction by diminishing the number of bytes actually transmitted over "the wire." Tomcat provides native compression support for the GZIP compression mechanism; however, it is NOT turned on by default.

The following example is based on Tomcat 7.0.76. We recommend that you carefully read the Tomcat documentation related to this topic before proceeding:

-

Tomcat 7.x documentation for the HTTP Connector (see Standard Implementation section)

-

Tomcat 8.5.x documentation for the HTTP/1.1 Connector(see Standard Implementation section)

Details

To enable compression, you will need to edit the $CATALINA_HOME/conf/server.xml file.

Locate the <Connector> element associated with your server. It is typically

the only <Connector> element whose port attribute is set equal to 8080.

You will need to add or change several of its attributes to enable compression.

With our Tomcat 7.0.76 distribution, we found this default <Connector>

element definition in our server.xml file:

<Connector

port="8080"

protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443"

/>You will need to add four attributes:

compression="force"

compressionMinSize="2048"

compressableMimeType="text/html,text/xml,text/plain,text/css,text/javascript,application/javascript,application/octet-stream,application/vnd.opendap.dap4.dataset-services+xml,application/vnd.opendap.dap4.dataset-metadata+xml,application/vnd.opendap.dap4.data,application/vnd.opendap.dap4.error+xml,application/json,application/prs.coverage+json,application/rdf+xml,application/x-netcdf;ver=4,application/x-netcdf,image/tiff;application=geotiff"The list of compressible MIME types includes all known response types for Hyrax.

The compression attribute may have the following values:

-

compression="no": Nothing is compressed (default if not provided). -

compression="yes": Only the compressible MIME types are compressed. -

compression="force": Everything gets compressed (assuming the client accepts gzip and the response is bigger than compressionMinSize).You must set compression="force"for compression to work with the OPeNDAP data transport.

When you are finished, your <Connector> element should look like the following:

<Connector

port="8080"

protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443"

compression="force"

compressionMinSize="2048"

compressableMimeType="text/html,text/xml,text/plain,text/css,text/javascript,application/javascript,application/octet-stream,application/vnd.opendap.dap4.dataset-services+xml,application/vnd.opendap.dap4.dataset-metadata+xml,application/vnd.opendap.dap4.data,application/vnd.opendap.dap4.error+xml,application/json,application/prs.coverage+json,application/rdf+xml,application/x-netcdf;ver=4,application/x-netcdf,image/tiff;application=geotiff"

/>Restart Tomcat for these changes to take effect.

You can verify the change by using curl as follows:

curl -H "Accept-Encoding: gzip" -I http://localhost:8080/opendap/data/nc/fnoc1.nc.ascii

| The above URL is for Hyrax running on your local system and accessing a dataset that ships with the server. |

You’ll know that compression is enabled if the response to the curl command contains:

Content-Encoding: gzip

| If you are using Tomcat in conjunction with the Apache Web Server (our friend httpd) via AJP, you will need to also configure Apache to deliver compressed responses Tomcat will not compress content sent over the AJP connection. |

4.2.8. Pitfalls with CentOS-7.x and/or SELinux

SELinux (bundled by default with CentOS-7) will create some new challenges for those not familiar with the changes it brings to the system environment. For one, Tomcat runs as a confined user. Here we’ll examine how these changes affect the OLFS.

Localizing the OLFS Configuration under SELinux

When using a yum-installed Tomcat on CentOS-7.x (or any other Linux

environment that is essentially an SELinux variant), neither the /etc/olfs

or the /usr/share/olfs configuration locations will work without taking extra steps.

You must alter the SELinux access policies to give the Tomcat user

permission to read and write to one of these directories.

The following code block will configures the /usr/share/olfs directory for reading

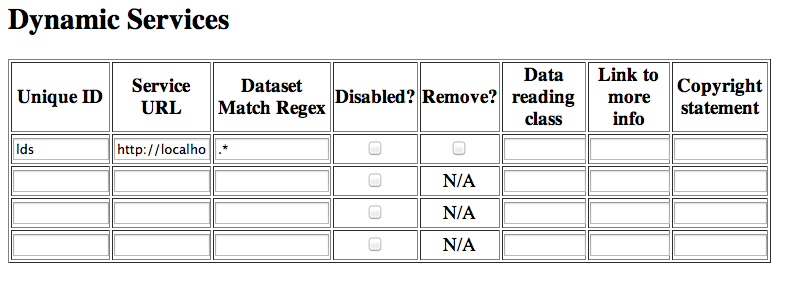

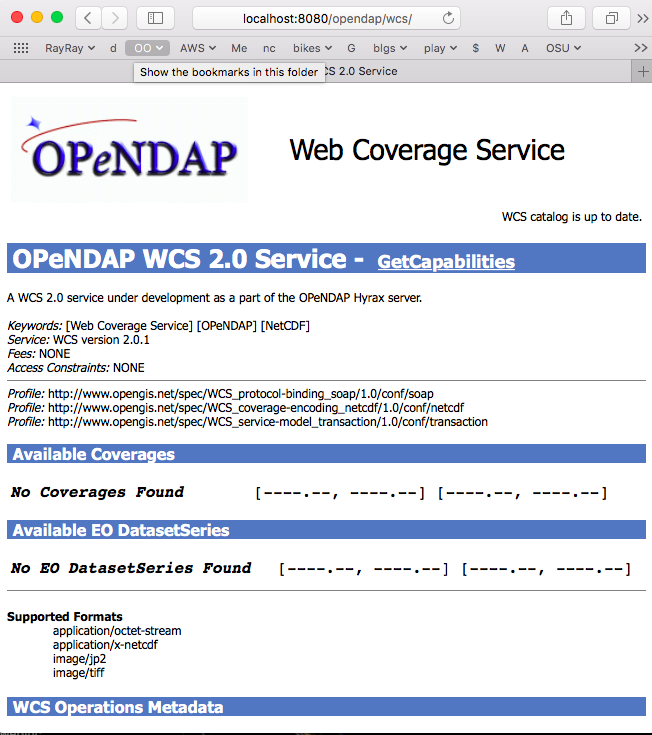

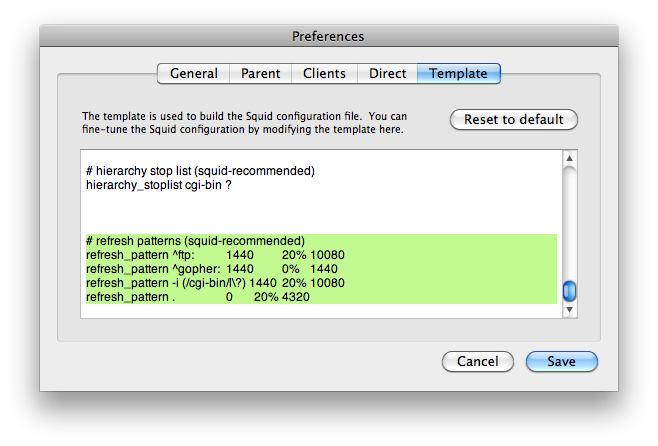

and writing by the Tomcat user: